Sitemap

A list of all the posts and pages found on the site. For you robots out there, there is an XML version available for digesting as well.

Pages

Posts

Future Blog Post

Published:

This post will show up by default. To disable scheduling of future posts, edit _config.yml and set future: false.
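
The setting mentioned above can be sketched as a config fragment. This assumes a Jekyll-based site whose configuration file is `_config.yml` at the repository root; the surrounding keys in a real file will differ.

```yaml
# _config.yml (assumed location: repository root of a Jekyll site)
# With future set to false, posts dated in the future are not published
# until their date has passed.
future: false
```

Jekyll reads this file at startup, so a running `jekyll serve` process typically needs a restart before the change takes effect.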

Blog Post number 4

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 3

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 2

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Blog Post number 1

Published:

This is a sample blog post. Lorem ipsum I can’t remember the rest of lorem ipsum and don’t have an internet connection right now. Testing testing testing this blog post. Blog posts are cool.

Portfolio

Portfolio item number 1

Short description of portfolio item number 1

Portfolio item number 2

Short description of portfolio item number 2

Publications

Core-periphery detection based on masked Bayesian nonnegative matrix factorization

Published in IEEE Transactions on Computational Social Systems (IEEE TCSS), 2024

Core–periphery structure is an essential mesoscale feature in complex networks. Previous research has mostly focused on discriminative approaches, while in this work we propose a generative model called masked Bayesian nonnegative matrix factorization. We build the model using two pair affiliation matrices to indicate core–periphery pair associations and a mask matrix to highlight connections to core nodes. We propose an approach to infer the model parameters and prove the convergence of variables with our approach. Beyond the capabilities of traditional approaches, it is able to identify core scores with overlapping core–periphery pairs. We verify the effectiveness of our method using randomly generated networks and real-world networks. Experimental results demonstrate that the proposed method outperforms traditional approaches.

Recommended citation: Wang, Zhonghao, et al. "Core–periphery detection based on masked Bayesian nonnegative matrix factorization." IEEE Transactions on Computational Social Systems 11.3 (2024): 4102-4113.

Download Paper | Download Bibtex

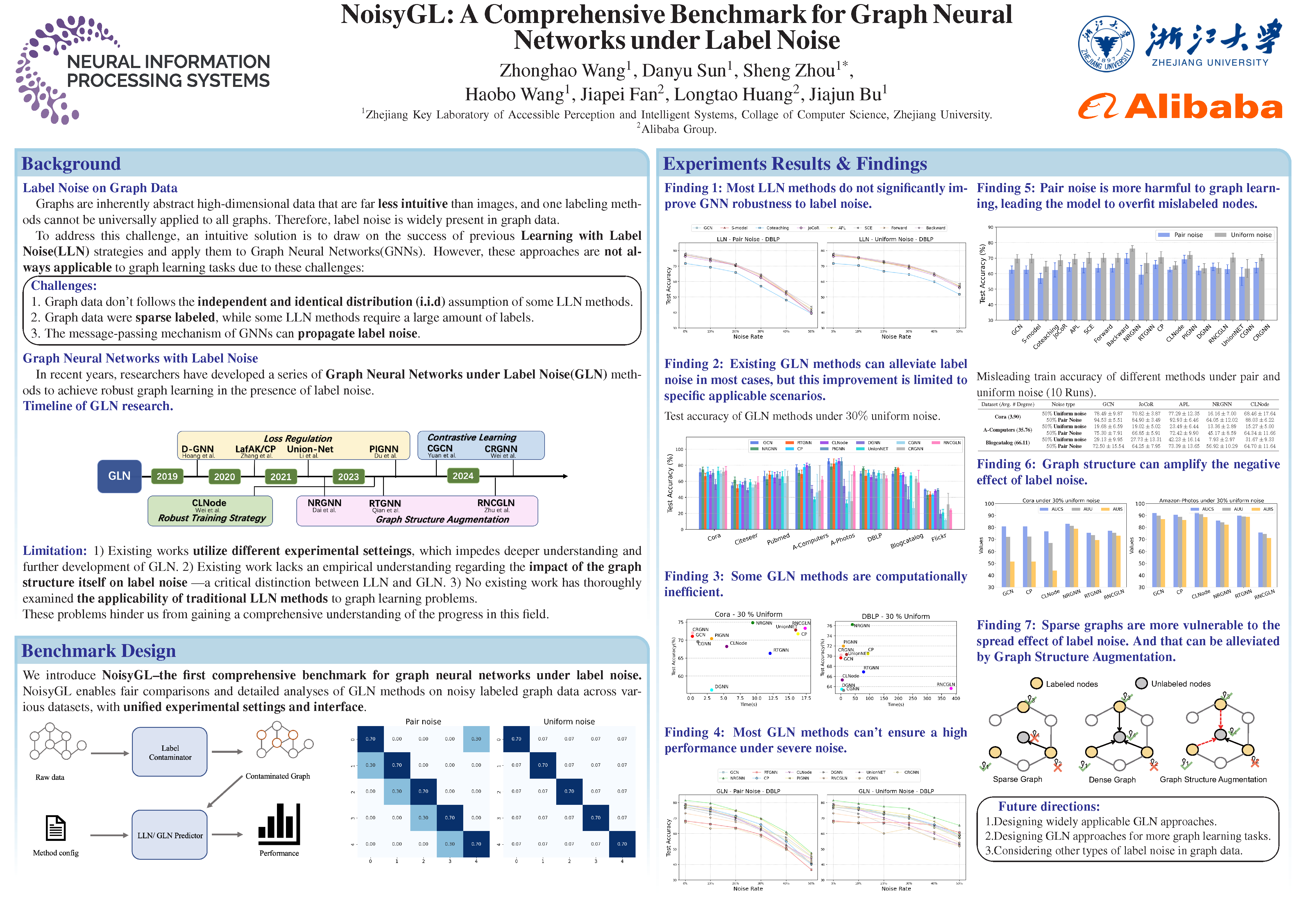

NoisyGL: A Comprehensive Benchmark for Graph Neural Networks under Label Noise

Published in Advances in Neural Information Processing Systems 37 (NeurIPS 2024), 2024

Graph Neural Networks (GNNs) exhibit strong potential in node classification tasks through a message-passing mechanism. However, their performance often hinges on high-quality node labels, which are challenging to obtain in real-world scenarios due to unreliable sources or adversarial attacks. Consequently, label noise is common in real-world graph data, negatively impacting GNNs by propagating incorrect information during training. To address this issue, the study of Graph Neural Networks under Label Noise (GLN) has recently gained traction. However, due to variations in dataset selection, data splitting, and preprocessing techniques, the community currently lacks a comprehensive benchmark, which impedes deeper understanding and further development of GLN. To fill this gap, we introduce NoisyGL in this paper, the first comprehensive benchmark for graph neural networks under label noise. NoisyGL enables fair comparisons and detailed analyses of GLN methods on noisy labeled graph data across various datasets, with unified experimental settings and interface. Our benchmark has uncovered several important insights that were missed in previous research, and we believe these findings will be highly beneficial for future studies. We hope our open-source benchmark library will foster further advancements in this field. The code of the benchmark can be found at https://github.com/eaglelab-zju/NoisyGL.

Recommended citation: Wang, Zhonghao, et al. "NoisyGL: A Comprehensive Benchmark for Graph Neural Networks under Label Noise." In Proceedings of the Advances in Neural Information Processing Systems 37 (2024): 38142-38170.

Download Paper | Download Bibtex

Learning from Graph: Mitigating Label Noise on Graph through Topological Feature Reconstruction

Published in 34th ACM International Conference on Information and Knowledge Management (CIKM 2025), 2025

Graph Neural Networks (GNNs) have shown remarkable performance in modeling graph data. However, labeling graph data typically relies on unreliable information, leading to noisy node labels. Existing approaches for GNNs under Label Noise (GLN) employ supervision signals beyond noisy labels for robust learning. While empirically effective, they tend to over-rely on supervision signals built upon external assumptions, leading to restricted applicability. In this work, we shift the focus to exploring how to extract useful information and learn from the graph itself, thus achieving robust graph learning. From an information theory perspective, we theoretically and empirically demonstrate that the graph itself contains reliable information for graph learning under label noise. Based on these insights, we propose the Topological Feature Reconstruction (TFR) method. Specifically, TFR leverages the fact that the pattern of clean labels can more accurately reconstruct graph features through topology, while noisy labels cannot. TFR is a simple and theoretically guaranteed model for robust graph learning under label noise. We conduct extensive experiments across datasets with varying properties. The results demonstrate the robustness and broad applicability of our proposed TFR compared to state-of-the-art baselines. Code is available at https://github.com/eaglelab-zju/TFR.

Recommended citation: Wang, Zhonghao, et al. "Learning from Graph: Mitigating Label Noise on Graph through Topological Feature Reconstruction." In Proceedings of the 34th ACM International Conference on Information and Knowledge Management (CIKM ’25).

Download Paper

Talks

NoisyGL: A Comprehensive Benchmark for Graph Neural Networks under Label Noise

Published:

This is a presentation of our paper NoisyGL: A Comprehensive Benchmark for Graph Neural Networks under Label Noise at NeurIPS 2024. You can find the slides here and the paper here. And here is the video of the presentation: NoisyGL: A Comprehensive Benchmark for Graph Neural Networks under Label Noise.

Learning from Graph: Mitigating Label Noise on Graph through Topological Feature Reconstruction

Published:

This is a presentation of our paper Learning from Graph: Mitigating Label Noise on Graph through Topological Feature Reconstruction at CIKM 2025. You can find the paper here.

FAQ: Feature noise and structure noise are common in graph learning scenarios. Is TFR equally robust to these types of noise?

Answer: No. TFR is specifically optimized for label noise and has not been optimized for feature noise or structure noise. However, we must admit that feature, structure, and label noise are all common issues in real-world scenarios. We believe designing a model that is robust to all of these types of noise is a crucial direction for future work. Of course, when every part of the graph data contains severe noise, a more efficient approach is to address the problem from a data perspective (e.g., re-cleaning the data to improve data quality).

Teaching

Teaching experience 1

Undergraduate course, University 1, Department, 2014

This is a description of a teaching experience. You can use markdown like any other post.

Teaching experience 2

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.